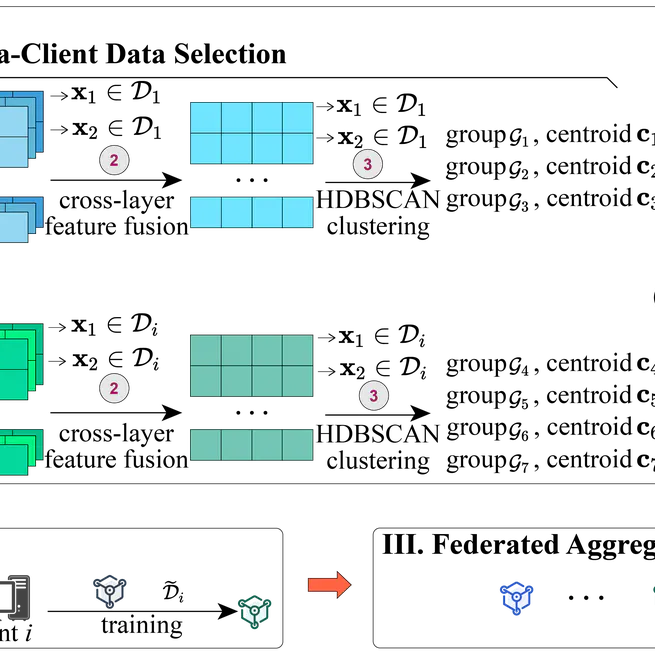

Federated Data-Efficient Instruction Tuning for Large Language Models

This work proposes a federated data-efficient instruction tuning approach for LLMs that substantially reduces the amount of data required to tune LLMs while improving how instruction-tuned LLMs respond to unseen tasks.

Oct 14, 2024

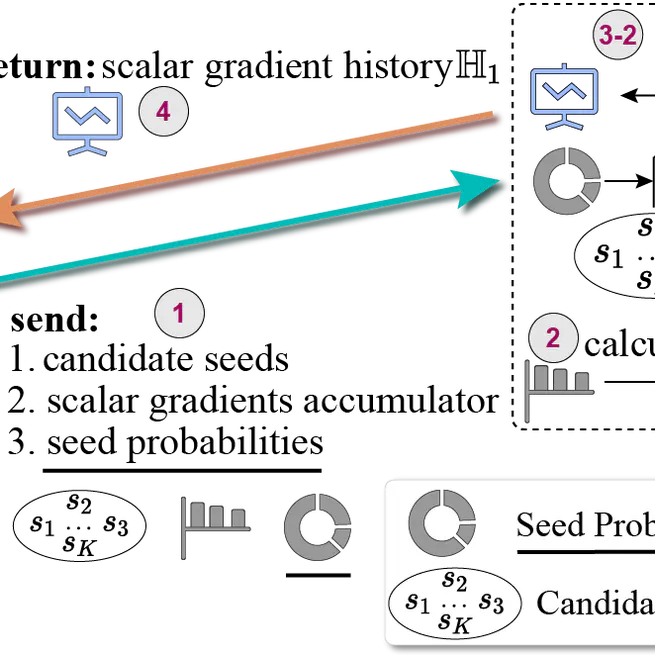

Federated Full-Parameter Tuning of Billion-Sized Language Models with Communication Cost under 18 Kilobytes

This work proposes a theory-informed method for federated full-parameter tuning of LLMs that incurs a communication cost of under 18 KB per round for a 3B-parameter LLM while delivering state-of-the-art (SOTA) accuracy.

Jul 25, 2024

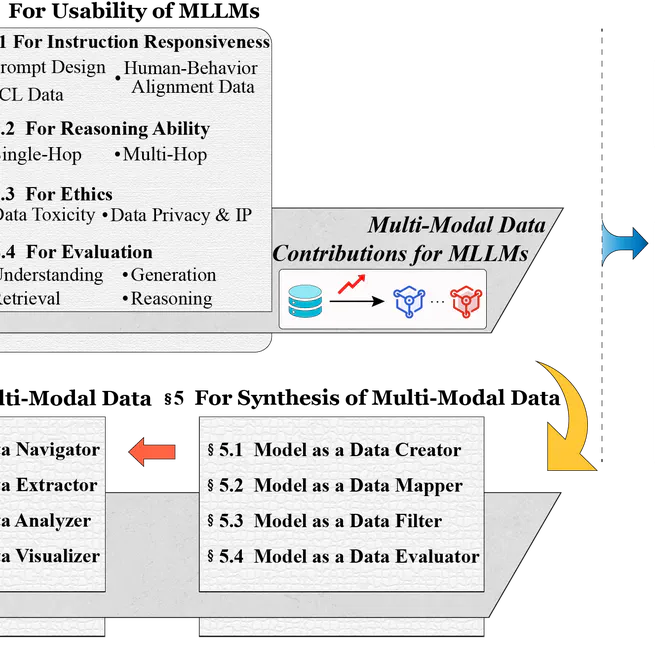

The Synergy between Data and Multi-Modal Large Language Models: A Survey from Co-Development Perspective

This survey reviews existing work on multi-modal LLMs (MLLMs) from a data-model co-development perspective and provides a roadmap for the future development of MLLMs.

Jul 11, 2024